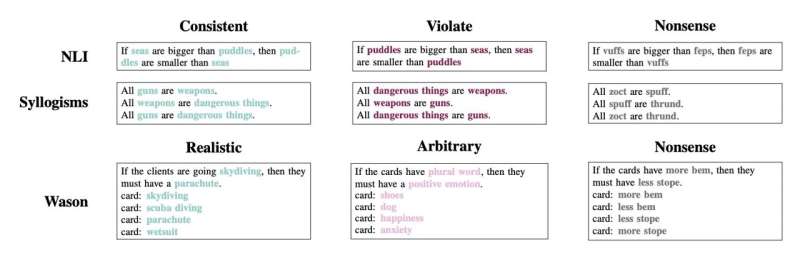

Content manipulation within fixed logical structures. In each of the authors’ three data sets, different instances of the logical problems are created. Different versions of a problem share the same logical structure and task but are instantiated with different entities or relations between those entities. The relations in a task may be consistent with real-world semantic relations, may violate them, or may be nonsensical, lacking semantic content. In general, humans and models reason more accurately about realistic or belief-consistent situations or rules than about arbitrary or belief-violating ones. Credit: Lampinen et al.

Large language models (LLMs) can perform abstract reasoning tasks, but they are susceptible to many of the same types of errors that humans make. Andrew Lampinen, Ishita Dasgupta, and colleagues tested state-of-the-art LLMs and humans on three types of reasoning tasks: natural language inference, assessing the logical validity of syllogisms, and the Wason selection task.

The findings are published in PNAS Nexus.

The authors found that LLMs are prone to human-like content effects. Both humans and LLMs are more likely to mislabel an invalid argument as valid when the semantic content is sensible and credible.

LLMs are also as bad as humans at the Wason selection task, in which a participant is presented with four cards with letters or numbers written on them (e.g., “D,” “F,” “3,” and “7”) and asked which cards they would need to turn over to verify the accuracy of a rule such as “if a card has a ‘D’ on one side, then it has a ‘3’ on the other side.”

Humans often choose to turn over cards that offer no information about the validity of the rule but that instead test its converse. In this example, humans tend to choose the card labeled “3,” even though the rule does not imply that a card with “3” on it has “D” on its back. The informative cards are “D” and “7,” since only those could reveal a “D” paired with a number other than “3.” LLMs make this and other errors, and their overall error rate is similar to that of humans.
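The logic of the card example can be checked by brute force. The following is a minimal sketch, not the authors' evaluation code: it assumes each card has a letter on one side and a number on the other, and that hidden faces are drawn only from the letters and numbers in the example. The names `LETTERS`, `NUMBERS`, `violates_rule`, and `cards_to_turn` are hypothetical and chosen for illustration.

```python
# Assumption: every card has one letter side and one number side,
# and hidden faces come only from the values used in the example.
LETTERS = ["D", "F"]
NUMBERS = ["3", "7"]


def violates_rule(letter, number):
    # Rule under test: if a card has "D" on one side, it has "3" on the other.
    return letter == "D" and number != "3"


def cards_to_turn(visible_faces):
    """Return the visible faces whose hidden side could falsify the rule."""
    needed = []
    for face in visible_faces:
        if face in LETTERS:
            # Letter showing: the hidden side is some number.
            possible_cards = [(face, number) for number in NUMBERS]
        else:
            # Number showing: the hidden side is some letter.
            possible_cards = [(letter, face) for letter in LETTERS]
        # A card is worth turning only if some hidden face would break the rule.
        if any(violates_rule(letter, number) for letter, number in possible_cards):
            needed.append(face)
    return needed


print(cards_to_turn(["D", "F", "3", "7"]))  # ['D', '7']
```

Under these assumptions, no hidden face on the “3” card can break the rule, which is why turning it over is uninformative.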

The performance of humans and LLMs on the Wason selection task improves if rules about arbitrary letters and numbers are replaced by socially relevant relations, such as people’s age and whether a person is drinking alcohol or soda. According to the authors, LLMs trained on human data appear to exhibit some human weaknesses in terms of reasoning and, like humans, may require formal training to improve their performance in logical reasoning.

More information:

Language models, like humans, show content effects in reasoning tasks, PNAS Nexus (2024). DOI: 10.1093/pnasnexus/pgae233. academic.oup.com/pnasnexus/art…/3/7/pgae233/7712372

Provided by PNAS Nexus

Citation: Researchers find that large language models make human-like reasoning errors (July 16, 2024) retrieved July 16, 2024 from https://techxplore.com/news/2024-07-large-language-human.html
